We've just finished getting PCI compliance for our SaaS offering at work. One of the interesting things around this is the need for Multi-Factor Authentication (I'm going to use the abbreviation MFA for this going forward). For those who don't know what MFA is, essentially it means that you need another secret to log in besides your password. Something like a code that gets SMS'd to your phone.

Now, this doesn't sound very contentious. Obviously MFA is more secure than SFA (Single Factor Authentication, i.e. your password or, even better, passphrase). But the debate has been really how to do it correctly.

The normal MFA flow would be:

- Challenge for username and password

- Check username and password; if either is incorrect, reject login.

- Prompt for MFA code.

- Check MFA code; if valid, allow login.

Simple right? Well this is where things get a bit sticky. This design stems from three restrictions of UX when it comes to public facing websites.

- Typically on a public facing webpage, the MFA is done using SMS. In order for the site to send you the code, it needs to know a number to send it to. It also needs to know when to actually send the code. So it makes sense to send the code and challenge for it after the login and password have been accepted.

- MFA is normally not mandatory. While systems will push MFA onto you (I'm looking at you Apple), there are few public pages which force you to have MFA in order to use them.

- As MFA causes friction in the sign-in process, a lot of sites only challenge people periodically, for instance every 30 days.

Given these 3 restrictions this pattern makes complete sense, so what's the problem? Here's the rub, there are a couple of security implications to this pattern.

Complication of State

Normally there is a binary state to authentication of a user in most models. She is either authenticated or not. This makes things clean for your trust model. Now we've introduced a new state: what state is the user in between steps 2 and 3 of the flow above? This is where MFA bypass attacks come into play.

The user needs to access certain endpoints to allow them to request a new code; however, they're obviously not allowed to go straight to the main page (otherwise it wouldn't actually be MFA).

We've complicated our model by introducing this state, and we need to make sure that state is sandboxed appropriately. In theory this should be fairly straightforward, but in practice things can get messy, which is what has given rise to MFA bypass attacks.

Integration tests should exercise every endpoint in the API as a user who has submitted valid credentials but has not yet entered their MFA token. It's important that these tests are complete and run automatically as part of your integration process. It's easy to start with the best intentions and then simply forget 6-8 months down the line.
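As a rough illustration of that kind of test, here is a minimal sketch in Python. It assumes a toy in-memory app where each session is in one of three states. The names `AuthState`, `ENDPOINTS`, `handle_request` and `find_mfa_bypasses` are all made up for this example and don't come from any real framework.

```python
from enum import Enum


class AuthState(Enum):
    ANONYMOUS = "anonymous"
    PASSWORD_OK = "password_ok"      # credentials accepted, MFA still pending
    AUTHENTICATED = "authenticated"  # credentials and MFA both accepted


# Hypothetical endpoint table: path -> lowest state allowed to call it.
ENDPOINTS = {
    "/login": AuthState.ANONYMOUS,
    "/mfa/resend": AuthState.PASSWORD_OK,
    "/mfa/verify": AuthState.PASSWORD_OK,
    "/dashboard": AuthState.AUTHENTICATED,
    "/api/invoices": AuthState.AUTHENTICATED,
}

# Ordering used to compare states.
_RANK = {
    AuthState.ANONYMOUS: 0,
    AuthState.PASSWORD_OK: 1,
    AuthState.AUTHENTICATED: 2,
}


def handle_request(path: str, state: AuthState) -> int:
    """Return an HTTP-style status code for a request made in the given state."""
    required = ENDPOINTS.get(path)
    if required is None:
        return 404
    return 200 if _RANK[state] >= _RANK[required] else 403


def find_mfa_bypasses() -> list:
    """List every protected endpoint that a half-authenticated session can reach."""
    return [
        path
        for path, required in ENDPOINTS.items()
        if required is AuthState.AUTHENTICATED
        and handle_request(path, AuthState.PASSWORD_OK) != 403
    ]
```

In a real suite the loop would walk the actual route table rather than a hand-written dictionary, so that a newly added endpoint is covered without anyone having to remember to add a test for it.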

Information Leak

Go to your favourite website and put in your username and an incorrect password. Look at the error message. Now put in an incorrect username and a valid password. Check out the error again; they're the same, right? That's because the site isn't leaking information. By that I mean the website isn't allowing an attacker to know that they have guessed either the username or password correctly.

With the pattern described above, we are leaking that the login and password are correct before they have fully authenticated. This really is an information leak but it's unavoidable with the approach defined above.
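To make the leak concrete, here is a small Python sketch of the first step of the typical flow. The user store, the response strings and the function names are hypothetical, and a real system would store salted password hashes rather than plain passwords.

```python
import hmac

# Hypothetical user store; a real system would hold salted password hashes.
USERS = {"alice@evilcorp.com": "correct horse battery staple"}


def two_step_login(username: str, password: str) -> str:
    """First step of the typical flow: check credentials before asking for MFA."""
    stored = USERS.get(username)
    if stored is None or not hmac.compare_digest(stored.encode(), password.encode()):
        return "invalid username or password"
    return "enter your MFA code"  # only ever shown for a correct password


def password_is_valid(username: str, guess: str) -> bool:
    """What an attacker can infer from the response alone, with no MFA device."""
    return two_step_login(username, guess) == "enter your MFA code"
```

The error message for a bad username and a bad password is identical, yet `password_is_valid` still works as an oracle, because the MFA prompt itself is the signal.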

A Better Approach?

OK, so we see the issues, and it raises the question: is there any way of implementing a flow without these issues? The problem with this flow stems from the restrictions rather than the flow itself.

Alright then, so if the problem is with the restrictions, are we sure they still apply? Excellent question! Let's explore that further by looking at some common vectors of attack, like those in the Vevo and Deloitte breaches.

Scenario 1 - Spear Phishing

So you're cruising the web.

Feeling good.

Then you get an email from LinkedIn about someone desperate to give you a fantastic new job paying millions of dollars.

Naturally, as you're a remarkable, and yet strangely chronically undervalued, employee at your current job in EvilCorp, you eagerly hit that link to read more.

You're prompted for your login details.

You think that's weird as normally your browser logs you in automatically but, whatever.

You put in your login and password.

And it turns out it's from a Nigerian Prince.

You shrug your shoulders and go on with your life.

Meanwhile, in some evil lair, unbeknownst to you, a hacker has stirred from their slumber. They have caught you in their web. They now have your login and password for LinkedIn. While I am sure that your LinkedIn profile is infinitely valuable to you, it's not really what they're looking for.

They have their eyes set on EvilCorp, which has chronically undervalued and underpaid you. They know your email and, as 99% of usernames are either the email address or the first part of the email address, they are confident they have your username.

So that leads to the next variable: do you practice good password hygiene? Are you using different passwords for every service, or is "P0w3rR4ng3rsRG0!" (great choice for a password BTW) that you used for LinkedIn your go-to password for everything?

They go to EvilCorp's page and with bated breath they enter your email address followed by "P0w3rR4ng3rsRG0!", and wait... Getting tense, isn't it? Bingo, they're presented with a challenge for MFA.

They have now validated your company login and password without needing the MFA code. They are now armed with more (valuable) information than they had to begin with. Hence, information leak.

Scenario 2 - Brute Force

Peter is the next haxx0ring legend.

He just needs to prove it.

He notices on Facebook that you're working for EvilCorp.

He takes a guess that your company email is probably <first letter of your first name><your surname>@evilcorp.com.

While Peter has determination, he isn't very sophisticated (yet).

He decides to try bruteforcing your password.

After a few hours and many forwarding boxes later, he hits pay dirt.

Whilst "sexgod" is an apt title for yourself, it might not have been the best password.

Now he is prompted for your MFA.

Now, neither of these scenarios ends with you being owned, but they do show that with the established pattern the password has been validated (or found). From there it's simply a case of finding a way of resetting the MFA, or looking for an MFA bypass.

Breaking the Restrictions.

OK, so now we have scenarios, let's look at those restrictions again. But instead of looking at them as a webapp catering to the wit and whimsy of the public, let's look at them from the more structured world of corporate webapps.

- Using SMS for MFA. The MFA code that gets sent when a user logs in is actually generated using a well-known and, more importantly, standard algorithm. You can check out the RFC here. These days there are many really excellent and free authenticators: Google Authenticator for Android, Microsoft Authenticator for iOS, and 1Password has a great implementation for Windows and OS X. All these authenticators are designed for MFA and use a standard QR code for setup, which takes less than 10 seconds. There really isn't any reason to use SMS to deliver an MFA code these days.

- There is no reason why MFA should not be mandatory across your company's infrastructure. MFA is easy to set up and offers a huge pay-off from a security perspective.

- Using something like 1Password makes entering a code on every login really trivial and less of a burden. The friction isn't totally removed, but considering the security pay-off the pain really is minimal.
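For a feel of how small the standard algorithm from the first point is, here is a sketch in Python. It assumes the standard in question is TOTP as specified in RFC 6238 (HMAC-SHA1 variant), which is what apps such as Google Authenticator implement. The function names and defaults are my own, and the secret is passed as raw bytes, whereas authenticator apps usually exchange it base32-encoded inside the QR code.

```python
import hashlib
import hmac
import struct
import time
from typing import Optional


def totp(secret: bytes, at: Optional[float] = None, step: int = 30, digits: int = 6) -> str:
    """Compute a TOTP code (RFC 6238, HMAC-SHA1) for a raw shared secret."""
    now = time.time() if at is None else at
    counter = int(now // step)  # number of time steps since the Unix epoch
    digest = hmac.new(secret, struct.pack(">Q", counter), hashlib.sha1).digest()
    offset = digest[-1] & 0x0F  # dynamic truncation, as in RFC 4226
    value = struct.unpack(">I", digest[offset:offset + 4])[0] & 0x7FFFFFFF
    return str(value % 10 ** digits).zfill(digits)


def verify_totp(secret: bytes, code: str, at: Optional[float] = None) -> bool:
    """Compare a submitted code with the expected one in constant time."""
    return hmac.compare_digest(totp(secret, at).encode(), code.encode())
```

Both the server and the authenticator app derive the code from the shared secret and the current time, so nothing needs to be sent to the user at login. A production check would normally also accept the adjacent time step to allow for clock drift, which this sketch leaves out.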

Obviously you need to have these discussions internally. But removing even one of these restrictions removes the information leak or reduces it significantly. Let's look at a few new approaches based on lifting the different restrictions.

1. Removing All Restrictions.

OK, this is easy:

- Challenge for username, password and MFA

- Check username and password and MFA, if any are incorrect, reject login.

- Allow the user to enter.

As you can see, this completely removes the complication of state and mitigates both of the attack scenarios, as the attacker has no idea whether the password is correct without first knowing the MFA code. We've doubled the number of unknown variables he has to correctly guess before gaining any more information.
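A minimal Python sketch of this single-step check is below. The user store, the fixed MFA code and the response strings are hypothetical stand-ins; in practice the password would be a salted hash and the expected code would be computed from the user's shared secret.

```python
import hmac

# Hypothetical user store: username -> (password, MFA code currently expected).
USERS = {"alice@evilcorp.com": ("correct horse battery staple", "492039")}

GENERIC_ERROR = "invalid login"


def single_step_login(username: str, password: str, mfa_code: str) -> str:
    """Check all three inputs together and give one answer for any failure."""
    # Unknown users are compared against dummy values so the same checks run on every path.
    stored_password, expected_code = USERS.get(username, ("", ""))
    user_known = username in USERS
    password_ok = hmac.compare_digest(stored_password.encode(), password.encode())
    code_ok = hmac.compare_digest(expected_code.encode(), mfa_code.encode())
    if user_known and password_ok and code_ok:
        return "welcome"
    return GENERIC_ERROR
```

There is only one request and one possible failure response, so there is no half-authenticated session to protect and nothing in the reply that tells the caller which of the three values was wrong.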

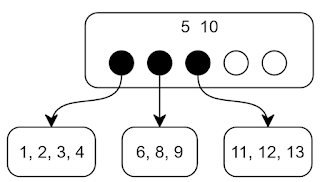

2. Removing SMS for MFA

This is a little more tricky as, obviously, we now have to deal with a mix of MFA and non-MFA users. So this would look like:

- Challenge for username and password.

- Check if the username needs MFA (do not check the password yet).

- If the username doesn't exist, prompt for MFA

- If the username does exist and the user needs MFA, prompt for MFA

- If the username does exist and the user doesn't need MFA, skip the MFA prompt and go straight to the check step.

- Prompt for MFA.

- Check the password and the MFA, if either of them is invalid, reject login.

- Allow the user to enter

Here we have completely removed the complication of state. However, we still do have an information leak. Let's break it down a bit.

If the attacker doesn't know the username or password what will he learn? He learns nothing, as we prompt for the MFA token if the username doesn't exist so there is no way of knowing if the user exists and needs MFA or if the user doesn't exist.

If the attacker knows the username (which these days is very likely, as it's normally email based) and has an idea of the password, then if the user needs MFA he learns nothing: we check both the MFA token and the password at the same time, and if either of them is wrong we reject the login. He has no way of knowing which one he got wrong.

If the attacker knows the username and password, he can't look for an MFA bypass, as we do not have any half-authenticated states (i.e. logged in but not answered the MFA challenge).
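The mixed flow can be sketched in Python as two functions: one that decides whether to show the MFA prompt, and one that does the final check. Everything here is an assumption for illustration: the user store, the fixed codes, and the names `needs_mfa_prompt` and `finish_login`.

```python
import hmac
from typing import Optional

# Hypothetical user store: username -> (password, expected MFA code or None if not enrolled).
USERS = {
    "alice@evilcorp.com": ("correct horse battery staple", "492039"),
    "bob@evilcorp.com": ("tr0ub4dor&3", None),
}


def needs_mfa_prompt(username: str) -> bool:
    """Decide whether to show the MFA prompt, without touching the password."""
    record = USERS.get(username)
    # Unknown usernames get the prompt too, so they look like enrolled users.
    return record is None or record[1] is not None


def finish_login(username: str, password: str, mfa_code: Optional[str]) -> bool:
    """Final check: the password and (if required) the MFA code are judged together."""
    record = USERS.get(username)
    if record is None:
        return False
    stored_password, expected_code = record
    password_ok = hmac.compare_digest(stored_password.encode(), password.encode())
    if expected_code is None:
        return password_ok
    code_ok = mfa_code is not None and hmac.compare_digest(
        expected_code.encode(), mfa_code.encode()
    )
    return password_ok and code_ok
```

The password is never examined until the final step, so no session ever sits between "password accepted" and "MFA accepted". The remaining leak is visible in `needs_mfa_prompt`: only an existing username that is not enrolled in MFA skips the prompt.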

Conclusions

When we looked at the restrictions, we went with the approach of lifting the SMS-for-MFA restriction. This meant we could have a security model which is stronger than the typical design pattern.

While you should always attempt to go for common patterns whenever possible, this does show that it's important to understand why those patterns are being used in the first place. In the case of the MFA pattern, it was a design created with the public in mind and with the minimum requirements needed for buy-in. In our case, and in a lot of cases for corporate webapps, the restrictions which were placed on the original pattern aren't there.

Going down the well-traveled road is the best choice in software most of the time. However, don't blindly follow it. I see this coming up a lot when people start talking about their stacks (MongoDB, Redis, Node.js) and when I ask them why they picked those over other approaches it's sometimes difficult to get a real answer. The patterns we implement and the services we put into a stack have massive repercussions for years down the road. It's a lot cheaper and more effective to spend time thinking about them now than trying to untangle them later down the path.